Digital Identity in the Age of AI

How do we build a pseudonymous identity system that holds up in the age of AI?

Such a system has three requirements: users own their identities (not corporations); users can interact across different networks in a privacy-preserving manner; and the network cannot be overtaken by malicious actors.

Online networks are prone to attacks, whether defrauding existing users or creating fake accounts to spread misinformation. This is an age-old problem.

Sybil resistance (defending a network against a single actor who controls many fake identities) has been a research topic since the early days of the internet and online networks. In combination, current approaches give us a good-enough solution to the problem.

With the arrival of general-purpose generative AI tools, the problem multiplies.

The barrier to creating new accounts is dropping: “deep fakes” are about to become cheaper than “mechanical turks” (human workers paid per task), and the protection that spam filters provide against bot-generated content is likely to fail soon.

A recent example shows deep fakes breaking a liveness test.

How can we distinguish humans from machines?

Current techniques, each suited to different use cases, are described below:

Reverse Turing Test presents users with AI-hard problems as a challenge (e.g. CAPTCHA). Newer tests, such as the "FLIP" test, use human-generated questions instead of algorithmically generated challenges.

Pseudonymous Party is a physical or virtual gathering where participants are authenticated through "liveness" at the same time, so each attendee can obtain only one pseudonymous identity.

Intersectional Identity bridges formal verification and informal mechanisms to confirm identity-related claims. It extends the “Web of Trust” by including markers such as education, location and work, which intersect with real-world activities.

Web of Trust consists of identity certificates validated through the digital signatures of other users. This is an OG approach and has been the basis of many techniques since the 90s.
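To make the Web of Trust idea concrete, here is a minimal Python sketch of how a user might decide which keys to accept based on certifications alone. It assumes the signatures have already been cryptographically verified and borrows the shape of PGP's classic trust model: a key is valid if enough already-valid keys, whose owners you trust to certify others, have signed it. The function name `valid_keys`, the parameters `completes_needed`, `marginals_needed` and `max_depth`, and their default values are illustrative assumptions, not any tool's actual API.

```python
from collections import defaultdict

FULL, MARGINAL = "full", "marginal"  # owner-trust levels; anything else means "no trust"


def valid_keys(root, certifications, owner_trust,
               completes_needed=1, marginals_needed=3, max_depth=5):
    """Return {key: depth} for every key that `root` can treat as valid.

    certifications: signer -> set of keys that signer has certified
        (signatures are assumed to have been checked already).
    owner_trust: key -> FULL or MARGINAL, i.e. how far root trusts that
        key's owner to certify others.
    """
    signers_of = defaultdict(set)
    for signer, subjects in certifications.items():
        for subject in subjects:
            signers_of[subject].add(signer)

    valid = {root: 0}  # root trusts its own key unconditionally
    for depth in range(1, max_depth + 1):
        newly_valid = {}
        for key, signers in signers_of.items():
            if key in valid:
                continue
            # Only certifications from keys validated in earlier rounds count.
            vouching = [s for s in signers if s in valid]
            full = sum(1 for s in vouching if s == root or owner_trust.get(s) == FULL)
            marginal = sum(1 for s in vouching if owner_trust.get(s) == MARGINAL)
            if full >= completes_needed or marginal >= marginals_needed:
                newly_valid[key] = depth
        if not newly_valid:
            break  # nothing new became valid; later rounds cannot change that
        valid.update(newly_valid)
    return valid
```

With Alice as the root, a key certified by a fully trusted Bob becomes valid, while a key certified only by two marginally trusted keys stays invalid unless `marginals_needed` is lowered to 2. Depth limits how far trust can be chained away from the user's own key.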

Token Curated Registries are incentive systems designed to replace list owners by creating economic incentives for decentralised list curation. Registry members hold tokens, which increase in value as the quality, legitimacy and popularity of the list increase. This rewards members for admitting only legitimate entries to the list.
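The deposit-and-challenge loop that drives a TCR can be sketched as follows. This is a simplified, hypothetical Python model, not any deployed registry's contract: applicants lock a deposit, a challenger stakes a matching amount, token holders vote with their tokens, and the losing side's stake is split between the winning party and the voters who sided with the outcome. `TokenCuratedRegistry`, `dispensation_pct` and the tie-breaking rule are assumptions; real designs also include application and commit/reveal voting periods, which are omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class TokenCuratedRegistry:
    """Toy TCR: listings are backed by deposits; disputes are settled by token-weighted votes."""
    min_deposit: int
    dispensation_pct: int = 50                    # assumed: winner's share of the loser's stake
    balances: dict = field(default_factory=dict)  # account -> unlocked tokens
    listings: dict = field(default_factory=dict)  # listing name -> (owner, locked deposit)

    def _debit(self, account, amount):
        if self.balances.get(account, 0) < amount:
            raise ValueError(f"{account} has insufficient tokens")
        self.balances[account] -= amount

    def _credit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount

    def apply(self, owner, name, deposit):
        """Lock a deposit to add a listing (the application period is omitted)."""
        if deposit < self.min_deposit:
            raise ValueError("deposit below the registry minimum")
        if name in self.listings:
            raise ValueError(f"{name} is already listed")
        self._debit(owner, deposit)
        self.listings[name] = (owner, deposit)

    def challenge(self, challenger, name, votes):
        """Dispute a listing. votes maps voter -> (keep_listing, token_weight).

        Returns True if the listing is removed.
        """
        owner, deposit = self.listings[name]
        self._debit(challenger, deposit)          # the challenger matches the listing's deposit
        keep = sum(w for support, w in votes.values() if support)
        remove = sum(w for support, w in votes.values() if not support)
        removed = remove > keep                   # ties favour the existing listing
        winner = challenger if removed else owner
        # Voters who sided with the outcome share part of the loser's forfeited stake.
        winners = {v: w for v, (support, w) in votes.items() if support != removed}
        winner_reward = deposit * self.dispensation_pct // 100
        voter_pool = deposit - winner_reward
        total = sum(winners.values())
        paid = 0
        if total > 0:
            for voter, weight in winners.items():
                share = voter_pool * weight // total
                self._credit(voter, share)
                paid += share
        # Rounding dust, or the whole pool if no weight backed the winner, goes to the winner.
        self._credit(winner, winner_reward + voter_pool - paid)
        if removed:
            # The owner's locked deposit funded the rewards above; the challenger's is returned.
            del self.listings[name]
            self._credit(challenger, deposit)
        return removed
```

Because curators lose their stake when they back a bad listing or challenge a good one, the expected payoff favours honest curation, which is the incentive the registry relies on.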

Decentralised Autonomous Organisations (DAOs) depend on human decision-making to function. Their activity produces 'human entropy' that is observable on-chain, which makes it a meaningful substrate for Proof of Personhood solutions.

Telling humans apart from machines is sometimes not enough: some use cases also need certainty that each human is unique.

The unique-human problem: Proof-of-personhood

“Proof-of-personhood is finding a way to assert that a given registered account is controlled by a real person (and a different real person from every other registered account), ideally without revealing which real person it is.” - Vitalik
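One way to get "a different real person from every other registered account" without revealing who that person is, similar in spirit to nullifier schemes such as Semaphore, is a per-application nullifier: each verified person holds a secret, and for a given application presents a hash of that secret and the application's ID. The application rejects repeated nullifiers, while nullifiers for different applications cannot be linked to each other. The Python sketch below is hypothetical: `PersonhoodRegistry.nullifier_for` stands in for the zero-knowledge proof a real system would use, so here the registry, rather than the application, checks enrolment and therefore sees the secret.

```python
import hashlib


def _hash(*parts: bytes) -> str:
    """SHA-256 over length-prefixed parts, so different inputs cannot concatenate to the same bytes."""
    digest = hashlib.sha256()
    for part in parts:
        digest.update(len(part).to_bytes(4, "big"))
        digest.update(part)
    return digest.hexdigest()


class PersonhoodRegistry:
    """Holds one commitment per verified person; how people get verified is out of scope."""

    def __init__(self):
        self.commitments = set()

    def enroll(self, secret: bytes) -> None:
        self.commitments.add(_hash(b"commitment", secret))

    def nullifier_for(self, secret: bytes, app_id: str) -> str:
        """Stand-in for a zero-knowledge proof of membership.

        A real system would let the person prove enrolment to the app without
        revealing their commitment; here the registry performs the check itself.
        """
        if _hash(b"commitment", secret) not in self.commitments:
            raise PermissionError("not an enrolled person")
        # Same person + same app -> same nullifier; different apps -> unrelated values.
        return _hash(b"nullifier", secret, app_id.encode())


class App:
    """An application that admits at most one account per verified person."""

    def __init__(self, app_id: str):
        self.app_id = app_id
        self.used_nullifiers = set()

    def sign_up(self, nullifier: str) -> None:
        if nullifier in self.used_nullifiers:
            raise ValueError("this person already has an account here")
        self.used_nullifiers.add(nullifier)
```

Signing up twice for the same application fails, while the same person's nullifiers for two different applications are unrelated hashes, so the applications cannot correlate accounts without the secret.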

Current initiatives, e.g. Aadhaar, BrightID, Circles, Idena, Proof of Humanity and Worldcoin, use a combination of these approaches.

Such systems enable one-person-one-vote semantics, as well as incentive mechanisms that reward good behaviour and counter plutocracy. A UBI (universal basic income) token, issued to every verified person, is the approach most projects use today.
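The difference between one-person-one-vote and token-weighted voting is easiest to see side by side. The hypothetical Python functions below tally the same ballots both ways and add a simple UBI accrual, under stated assumptions: `verified` is the set of accounts that passed proof-of-personhood, and the UBI is modelled as a flat per-hour rate for every verified account (`rate_per_hour` and the timestamps are illustrative, not any project's parameters).

```python
from collections import Counter


def token_weighted_tally(ballots, balances):
    """Plutocratic baseline: each ballot counts with the voter's token balance."""
    tally = Counter()
    for account, choice in ballots.items():
        tally[choice] += balances.get(account, 0)
    return tally


def one_person_one_vote_tally(ballots, verified):
    """One vote per account that passed proof-of-personhood; unverified accounts are ignored."""
    return Counter(choice for account, choice in ballots.items() if account in verified)


def ubi_accrued(verified, registered_at, now, rate_per_hour):
    """Hypothetical UBI stream: every verified account accrues the same amount per hour.

    registered_at maps account -> UNIX time (seconds) when it was verified.
    """
    return {account: rate_per_hour * (now - registered_at[account]) // 3600
            for account in verified}


# Example: one large holder and two unverified bots against three verified humans.
ballots = {"whale": "A", "ann": "B", "ben": "B", "cat": "B", "bot1": "A", "bot2": "A"}
balances = {"whale": 1000, "ann": 10, "ben": 10, "cat": 10, "bot1": 5, "bot2": 5}
verified = {"whale", "ann", "ben", "cat"}
print(token_weighted_tally(ballots, balances).most_common(1))       # [('A', 1010)]
print(one_person_one_vote_tally(ballots, verified).most_common(1))  # [('B', 3)]
```

In the example, a single large holder plus two unverified bot accounts win the token-weighted tally, while the one-person-one-vote tally follows the three verified humans.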

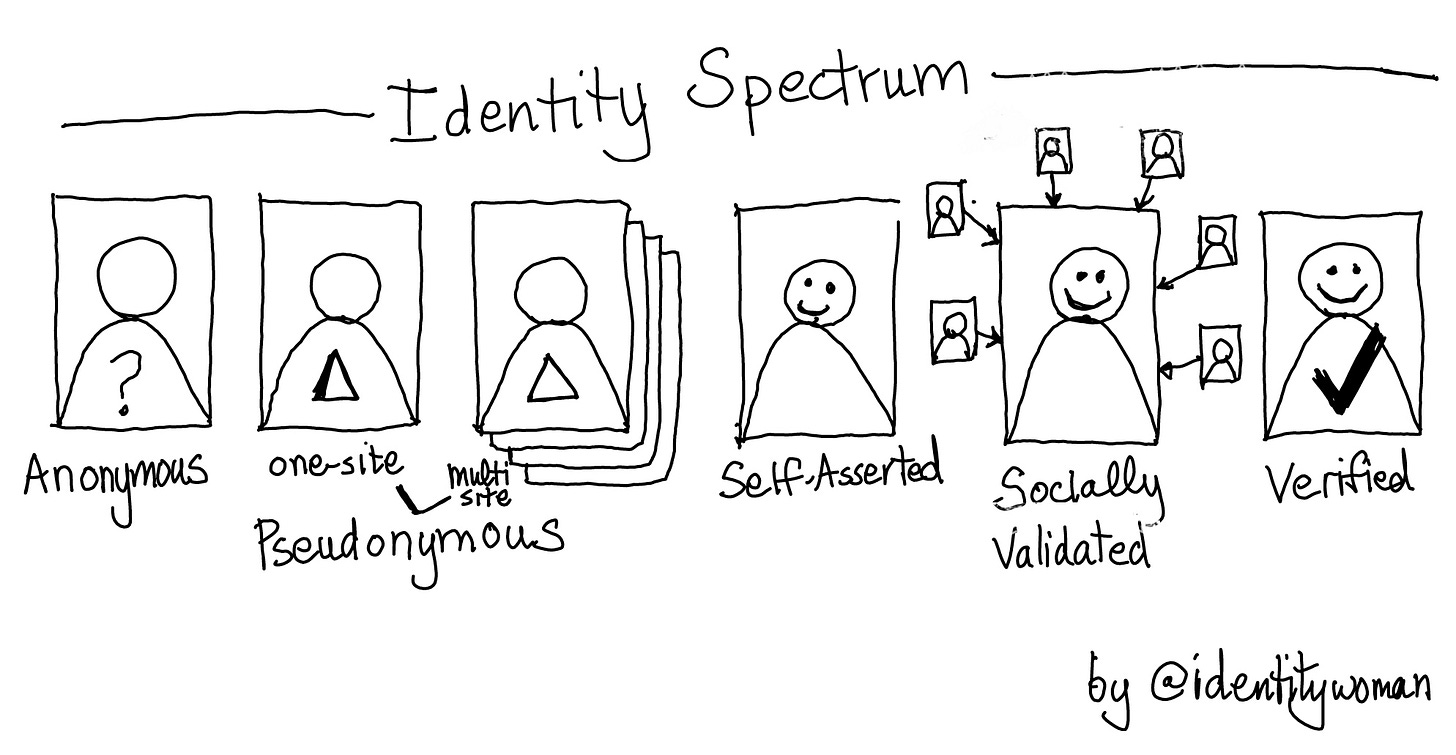

The Identity Spectrum

Users play different roles in online ecosystems through various personas.

On Reddit and other pseudonymous networks, reputation is built by contributing, engaging and supporting the community. Different guarantees are required to take part in different networks.

Proof-of-personhood matters where a network must verify that participating users are unique (i.e. each account is tied to a distinct real-world person).

Biometric proof-of-personhood at scale can erode privacy, or be too opaque or centralised. In networks that do not need uniqueness, users must be free to be pseudonymous or anonymous, or to self-assert or socially confirm their identity.

“A social-graph-based system bootstrapped off tens of millions of biometric ID holders, however, could actually work.” - Vitalik.

A social-graph-based approach lets identity take on different nuances in different contexts, acting as a middle layer between biometric (and other) identity systems and the user’s application layer.
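A social graph bootstrapped off verified identities can be sketched with SybilRank-style trust propagation. SybilRank is a published Sybil-detection algorithm; this is a simplified version and not any specific project's method. Trust starts at seed accounts assumed to be verified by a biometric or other strong ID system, spreads evenly along social connections for about log2(n) rounds, and is then normalised by each account's number of connections. Sybil clusters joined to the honest graph by only a few "attack edges" receive little trust. The function name `sybilrank`, the example graph and `total_trust` are illustrative assumptions.

```python
import math


def sybilrank(edges, seeds, total_trust=1000.0, iterations=None):
    """Degree-normalised trust after early-terminated propagation from seed accounts.

    edges: undirected (a, b) connections in the social graph.
    seeds: accounts assumed to be verified by a biometric or other strong ID system.
    Lower scores suggest an account is more likely to be a Sybil.
    """
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    if not seeds or any(s not in neighbours for s in seeds):
        raise ValueError("seeds must be non-empty and present in the graph")

    trust = {v: 0.0 for v in neighbours}
    for s in seeds:
        trust[s] = total_trust / len(seeds)
    if iterations is None:
        # Stop after ~log2(n) rounds, before trust has time to leak into Sybil regions.
        iterations = math.ceil(math.log2(len(neighbours)))

    for _ in range(iterations):
        nxt = {v: 0.0 for v in neighbours}
        for u, friends in neighbours.items():
            share = trust[u] / len(friends)  # split trust evenly across connections
            for v in friends:
                nxt[v] += share
        trust = nxt
    return {v: trust[v] / len(neighbours[v]) for v in neighbours}
```

Stopping early is the key design choice: run to convergence, trust would spread across the whole connected graph in proportion to degree and Sybils would no longer stand out.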

At Shovel, we are building a user-controlled data store and social graph protocol to enable privacy-preserving trust systems for use cases requiring verified identity.